Cloud computing accelerates innovation by providing ubiquitous access to computing resources at the click of a button. However, enterprises are wary of the costs associated with public cloud, especially as their usage grows.

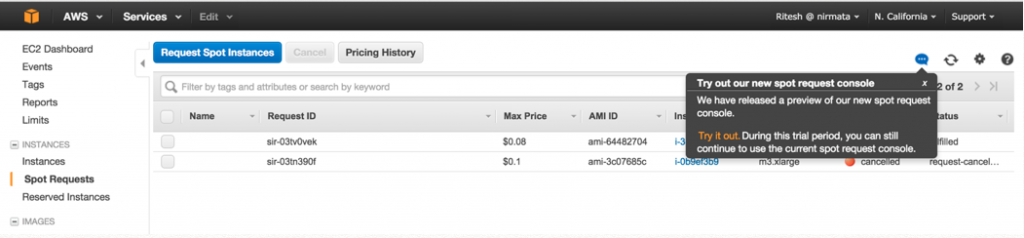

Easiest way to leverage EC2 Spot Instances with Docker and Nirmata

Nov 10, 2015 12:56:18 PM / by Ritesh Patel posted in Containers, Nirmata, Continuous Delivery, Product, microservices, Engineering, cloud, DevOps, AWS, Orchestration, Docker, Cloud Architecture

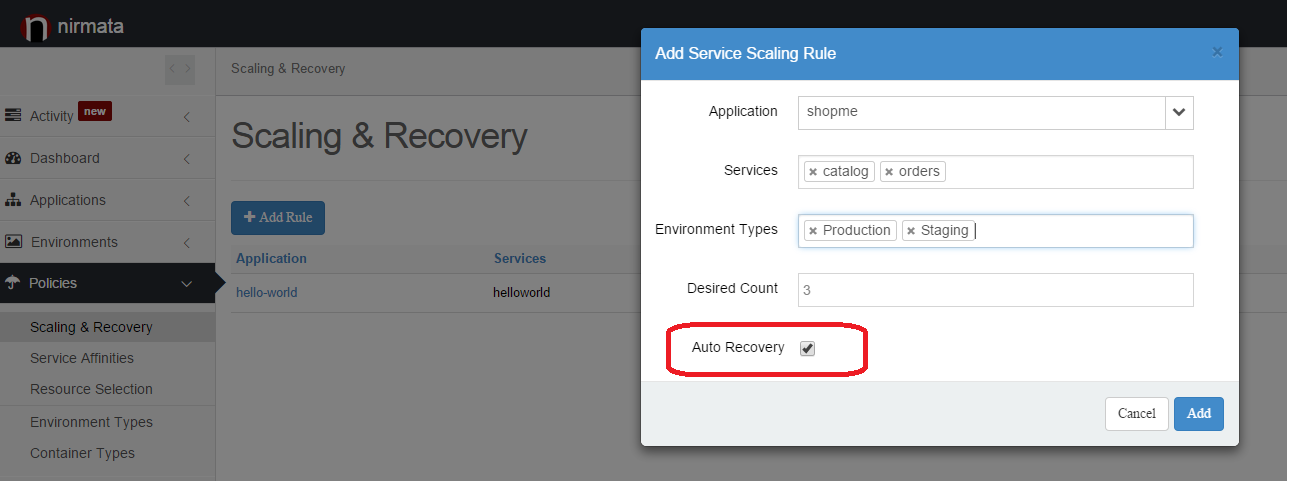

Auto-Recovery, Activity Feeds, Host Details and More

Apr 13, 2015 2:26:57 PM / by Damien Toledo posted in cloud applications, Containers, Cloud native, Continuous Delivery, resiliency, Product, microservices, DevOps, Orchestration, Cloud Architecture

Nirmata is pleased to announce new features and improvements to our solution. Our focus has been on resiliency and state management:

Microservices: Five Architectural Constraints

Feb 2, 2015 11:55:12 AM / by Jim Bugwadia posted in microservices, Engineering, DevOps, Cloud Architecture

Microservices is a new software architecture and delivery paradigm, where applications are composed of several small runtime services.

The real value of Cloud – it's not what you think it is!

Sep 15, 2014 2:50:52 AM / by Ritesh Patel posted in Business, Cloud Architecture

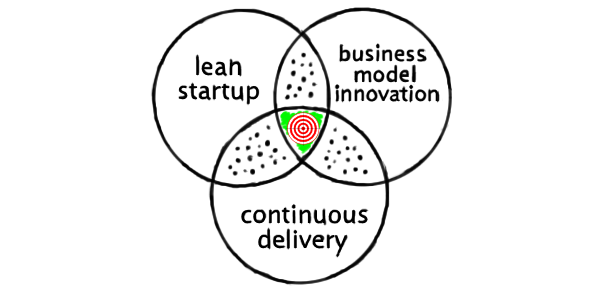

Over the last couple of years, as I spoke to various enterprise customers, the most common cloud use case that I heard was test/dev cloud. This is a great first step as enterprises become familiar with the technology. Most customers easily understand the top benefits of adopting cloud i.e. lower cost and increased IT agility. But one key advantage often ignored early on is the strategic value of adopting cloud to rapidly innovate and compete. True business innovation is achieved by delivering products and services quickly, gathering actual customer data and making incremental changes to proactively respond to customer needs.

Using containers to transform traditional applications

Jul 13, 2014 5:20:12 AM / by Ritesh Patel posted in Engineering, cloud, container, Cloud Architecture

Cloud native software: key characteristics

May 20, 2014 5:00:55 AM / by Jim Bugwadia posted in cloud applications, microservices, Engineering, Cloud Architecture

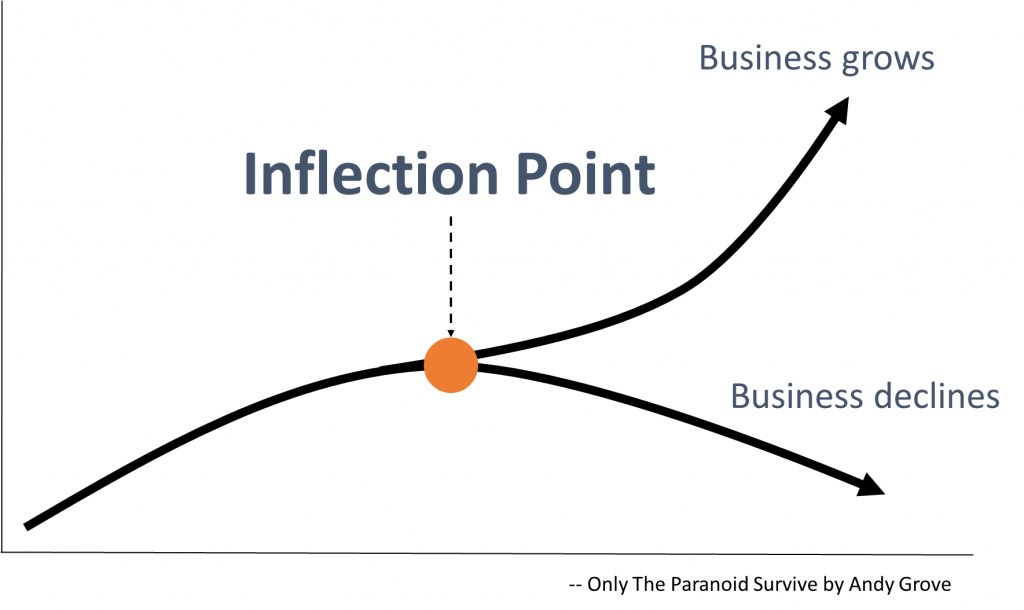

The Inflection Point in Enterprise Software

Mar 13, 2014 2:07:37 AM / by Jim Bugwadia posted in Continuous Delivery, Business, Cloud Architecture

Enterprise software is at a major inflection point and how businesses act now will determine their future.

For the last few decades, enterprise software development has evolved around the client-server compute paradigm, and product delivery models where customers are responsible for software maintenance and operations.

Cloud is now the new compute paradigm, and cloud computing impacts how enterprise software is built, sold, and managed. With the cloud-based consumption model, vendors are now responsible for ongoing software maintenance and operations. Cloud computing has also led to solutions that increasingly blur the distinction between “non-tech” products and smart technology-enabled solutions, which is making software delivery a core competency for every business.

Businesses that understand these changes, and are able to embrace cloud-native software development, will succeed. Let's discuss each of these three topics in more detail:

Cloud is the new computing paradigm

Over the last few years Enterprise IT has rapidly transitioned away from building and operating static, expensive, data centers. The initial shift was to virtualize network, compute, and storage and move towards software defined data centers. But once that change occurred within organizations, it became a no-brainer to take the next step and fully adopt cloud computing. With cloud computing, enterprise IT is now delivered “as a service”. Business units interact with IT using web based self-service tools and expect rapid delivery of services.

“...cloud computing is set to become mainstream computing, period” -- Joe McKendrick’s single prediction for 2014

Cloud computing is ideal for product and software development. The key driver here is not cost savings, but business agility. Most software and IT projects fail due to inadequate requirements based on what might have worked before, a poor understanding of customer needs, and lack of data on actual adoption and usage. Cloud computing lets small teams run business experiments faster, and without large capital investments. This enables the Lean Enterprise “build-measure-learn” mindset for product development.

However, software built for the cloud is very different from software designed for traditional static data centers. Monolithic tiered systems and integrated applications do not do very well in the cloud. The pioneers who have fully embraced cloud computing have also evolved to a new software architecture. This is the first driver for the inflection point in enterprise software.

Most of today's applications, and all of tomorrow's, are built with the cloud in mind. That means yesterday's infrastructure -- and accompanying assumptions about resource allocation, cost and development -- simply won't do.

-- Bernard Golden, The New Cloud Application Design Paradigm

Consumption economics is here to stay

In their engaging book “Consumption Economics”, the authors provide compelling insights into how cloud computing and managed services are changing enterprise IT business models.

In the past, the risk of implementation for any large and complex IT project has been mostly on the customer. Even when the customer engages with a vendor’s professional services team, or a system integrator, the customer pays the bill regardless of the project’s outcome.

Enterprise IT customers have also been trained to spend large amounts on the initial purchase of products, and typically pay 10-20% for annual maintenance. IT vendors have been able to mostly pass the burden of system integration, operations and maintenance, including managing upgrades and scalability, to their customers.

The advances in network availability and speed, and the rise of the internet, led to hosted service models. But it was the financial crisis in the last decade that forced vendors to aggressively compete for shrinking IT budgets. During this time, customers could not afford the risks of large IT projects with low success rates, and turned to an “as a service” delivery model. This transition not only replaced expensive CAPEX budgets with lower-cost OPEX budgets, but also moved all the risk of implementation and delivery to the enterprise IT vendors.

What this means for businesses selling into the enterprise is that they now need to invest in building systems that make it easier, and more cost effective, to operate and manage software at scale and for multiple tenants. The businesses that get good at this will have a significant advantage over those that try to shoehorn existing systems into the cloud.

This is the second driver for the inflection point in enterprise software.

Every business is now a software business

Many businesses provide software as a part, or the entirety, of their product offering. It is clear that these businesses need to deliver software, better and faster, to win.

However, another major transition that is occurring is that software is redefining every business, even those that were previously thought of as “non-tech” companies. This is why in late 2013 Monsanto, an agriculture company, bought a weather prediction software company founded by two former Google employees in 2006. This is why GE has established a new Global Software division, located in San Ramon, California, and has invested millions in Pivotal, a platform-as-a-service company. This is also why every major retailer now has a Silicon Valley office.

Businesses that can build software faster will win. This is the third driver for the inflection point in enterprise software.

Nike’s FuelBand is both a device and a collaboration solution (that’s why Under Armour bought MapMyFitness). Siemens Medical’s MRI machines are both a camera (of sorts) and a content management system. Heck, even a Citibank credit card is both a payment tool and an online financial application. Any company that is embracing the age of the customer is quickly learning that you can’t do that without software. -- James Staten, Forrester

What you can do

Today, for most businesses a cloud strategy is all about delivering core IT services like compute, network, and storage faster to their business teams. This is an important first step, but not enough.

As a business, your end goal is to deliver products and services faster. This translates to being able to run business experiments efficiently, and being able to develop and operate software faster, at scale, and for multiple tenants.

To do this, your business needs to adopt a strategy to embrace cloud-native software. This means a move away from developing integrated, monolithic, 3-tiered software systems that have served us well in the client-server era, and towards composable cloud-native applications. As with any paradigm shift, you can start with a pilot project, learn, and grow from there.

In this post, I mentioned cloud-native software a few times but did not discuss what exactly that is. While that is rapidly evolving, there are common patterns and best practices in place. In my next post, I will discuss some of these and how you can transform current software to cloud native.

References

- Joe McKendrick, My One Big Fat Cloud Computing Prediction for 2014, http://www.forbes.com/sites/joemckendrick/2013/12/19/my-one-big-fat-cloud-computing-prediction-for-2014/

- Bernard Golden, http://www.cio.com/article/746597/The_New_Cloud_Application_Design_Paradigm

- Consumption Economics: The New Rules of Tech, http://www.amazon.com/Consumption-Economics-The-Rules-Tech/dp/0984213031

- James Staten, Forrester, http://blogs.forrester.com/james_staten/14-03-17-how_is_an_earthquake_triggered_in_silicon_valley_turning_your_company_into_a_software_vendor

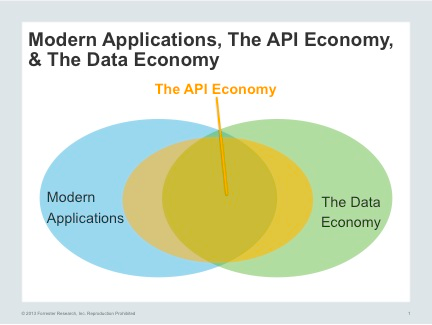

Apps and APIs fuel the digital economy

Nov 13, 2013 1:14:25 AM / by Ritesh Patel posted in API, Business, Cloud Architecture

Last week, at the “I Love APIs” conference hosted by Apigee, it was amazing to see companies finally embracing APIs and to learn about how they are monetizing them. Technology companies that have grown up in the digital age have long adopted an “API first” strategy, where the API is the primary external interface and other interfaces, such as web and mobile, use the API. Non-technology companies (or “digital immigrants”, as Chet Kapoor, CEO of Apigee, referred to these companies using a term coined by Marc Prensky) have now started to realize the potential of APIs.

Just three years ago, I was having a hard time at my previous employer convincing an executive about the merits of launching an API program. Now, the question is no longer “Do we need APIs?” but “When/how can we get them?”

At the conference, it was extremely interesting to understand various companies’ motivations for launching an API program. In the case of Walgreens, it was the opportunity to reach audiences beyond in-store customers that motivated the development of the QuickPrints API. Pearson group opened up their massive content via APIs to allow developers to build creative mashups, and for OnStar, APIs are a natural evolution as the world moves towards connected vehicles. Even Kaiser Permanente kicked off its API program earlier this year. In each case, the API users were internal/external developers or technology partners.

It is clear that we are well into the digital economy where applications and APIs are the new currency and developers are a key customer/partner.

At the conference, several companies shared their experience launching API programs including, of course, the challenges they faced. While developing APIs seems straightforward, directly jumping into API development without a cohesive strategy has inherent risks. Companies that don’t have experience with APIs and developer programs should: adopt an iterative process by delivering a small set of APIs, interact with the developers, and incorporate feedback while learning from the process.

Getting developers’ attention and interest was identified as a key challenge.

Just publishing APIs and hoping developers will come doesn’t work. Using targeted marketing techniques as well as providing appropriate monetary incentives is required. Companies also need to ensure that their APIs are well documented, easy to use, and follow best practices. A few presenters also warned against just putting an API around existing applications. While most companies end up taking this approach for ‘time to market’ reasons, without adequate precautions, this approach can be catastrophic in case the API usage grows unexpectedly.

Companies serious about an API program need to consider investing in the right application architecture, one that is resilient and can scale.

Also last week, Cisco provided an additional boost to the application economy by announcing Application-centric infrastructure (ACI). In my opinion, most applications need not really know much about the underlying network as long as it provides connectivity and the desired level of quality of service.

This week promises to be an exciting one as Amazon AWS re:Invent kicks off on Tuesday. Amazon pioneered the “API first” movement with Jeff Bezos’s mandate to developers to expose their data and functionality through service interfaces only. AWS has really jump-started the application explosion, but we ain't seen nothing yet!

The next few years will bring exciting innovation as more companies actively participate in the digital economy.

At Nirmata, our mission is to help developers and organizations rapidly innovate and accelerate their journey to the cloud. Our cloud services platform has been designed with an “API first” approach to deliver composable, cloud ready, next generation applications. Below is a short explainer video. Let us know what you think and how we can help you thrive in the digital economy.

-Ritesh Patel

Follow us on Twitter @NirmataCloud

REST is not about APIs, Part 2

Nov 12, 2013 1:12:40 AM / by Jim Bugwadia posted in REST, Engineering, Cloud Architecture

Most articles on REST seem to focus only on APIs. This view misses several key benefits of a RESTful system. The true potential of REST is to build systems as scalable, distributed, resilient, and composable as the Web. Yes, APIs play a role in this but by themselves are not enough.

In Part 1 of this article I described the 6 architectural constraints, 4 interface constraints, and 3 architectural components defined in the REST architectural style. I also detailed why the API centric view of REST merely scratches the surface.

In this final part, I will show how to apply the full REST architectural style, discuss how the benefits are achieved, and provide a way to incrementally migrate to a RESTful architecture.

Applying the basic constraints

Let's try to build a conceptual system from the REST constraints and elements. Our first attempt uses the following constraints:

- Client – Server: separation of client and server roles

- Stateless: externalize all data & state (including request state) from the server. We can choose some combination of NoSQL, SQL, or distributed cache data management solutions.

- Cache: add a cache component to the client, as well as the server

- Uniform Interface: provide a RESTful API at the server

- Layered System: the application and data tier are separated into layers

Since we made the application server stateless, we can now add a gateway component for common request management, and scale server instances up & down behind it. The gateway could be implemented using a load balancer or a reverse proxy, and itself can scale up or down as needed. Based on the choice of data management solution, we can also replace the single server with a cluster of data management servers.

We now have a classic tiered architecture and each tier is elastic. This may be good enough for some applications. In fact most current applications use this, or a close variant of this, tiered architecture.

However, this architecture will quickly become difficult to scale and manage in a cloud. Here are a few reasons why:

- The application server is monolithic; scaling requires deploying the entire application as a separate instance.

- The failure domain is the entire application. A single bug, or issue, can impact the full application.

- A single type of data tier is assumed for the entire application. In reality, different modules will need different types of data management services.

- Incremental changes that impact only a small set of functions are not possible to test and manage.

To solve these problems we need to further apply the REST constraints on the application tier itself.

The next step would be to further decompose the application server into multiple RESTful services. Let's say we had two top-level modules in our application; we can make each of them a separate service. A service is now the fundamental unit of deployment and management. Each service is stateless and has its own uniform interface. Each service has its own data cache and storage tier, and these can use different types of data management technologies based on the requirements. A service can also be scaled up or down independently of other services.

The tradeoff with this architecture is that additional services will now be needed to manage the inter-service communication. We can add a service registry to allow services to look up other services. The gateway also needs more intelligence for routing requests and finding services.
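To make the registry idea concrete, here is a minimal in-memory sketch in bash. It is only an illustration of the register/lookup contract, not how Eureka or any real registry is implemented. The function names `register` and `lookup`, the `LOOKUP_RESULT` variable, and the addresses in the usage note are all hypothetical. A gateway could use the same round-robin lookup to spread requests across instances.

```bash
#!/usr/bin/env bash
# Toy in-memory service registry (requires bash 4+ for associative arrays).
declare -A REGISTRY    # service name -> space-separated "host:port" instances
declare -A NEXT_INDEX  # service name -> index of the next instance to hand out

# register NAME HOST:PORT : add one instance of a service
register() {
    REGISTRY[$1]="${REGISTRY[$1]:+${REGISTRY[$1]} }$2"
}

# lookup NAME : store the next instance (round-robin) in LOOKUP_RESULT.
# Returns 1 if no instance of the service is registered.
# A variable is used instead of stdout so the round-robin counter is not
# lost in a command-substitution subshell.
lookup() {
    local -a instances
    read -r -a instances <<< "${REGISTRY[$1]:-}"
    (( ${#instances[@]} > 0 )) || return 1
    local i=${NEXT_INDEX[$1]:-0}
    LOOKUP_RESULT=${instances[i % ${#instances[@]}]}
    NEXT_INDEX[$1]=$(( i + 1 ))
}
```

For example, after `register orders 10.0.0.1:8081` and `register orders 10.0.0.2:8081`, successive calls to `lookup orders` alternate between the two instances. A real registry would also need health checks and expiry of stale registrations, which this sketch leaves out.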

Case Studies

Businesses adopting cloud computing have also adopted a RESTful distributed cloud services architecture. Here are a few examples:

- Netflix transitioned from monolithic tiers to a cloud services approach. They have open-sourced their service registry (Eureka), gateway (Zuul) and several other components. Their engineering blog and the Netflix OSS GitHub repository are great resources.

- LinkedIn made the transition from monolithic application to distributed services. This InfoQ presentation from Jay Kreps is a great overview.

- Twitter switched from what they called a “monorails” application to distributed JVM based services. Jeremy Cloud discusses this in an InfoQ presentation, “Decomposing Twitter”.

- Amazon has not directly presented this, but there are reports of their architecture based on internal APIs and services.

- Yammer also uses a distributed services based approach, and has open-sourced a tool called Dropwizard to aid in building RESTful Java services.

Applying to Existing Systems

If you are like most developers, it may seem like a stretch to even consider making bold changes to your system to move towards a RESTful architecture. However, the fine-grained services approach has another key advantage. You can leverage the emphasis on modularity to incrementally transform a monolithic application to a cloud services architecture.

You can select an existing module, or, when it's time to build a new module, build it as a library which can be run as part of your monolithic application or as a separate service.
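The "library or separate service" idea can be illustrated with a small shell analogy. This is a sketch only: the module name `pricing.sh` and the function `discounted_price` are hypothetical, and a real Java module would expose the same choice through a jar versus a deployed war. The same file can be sourced by a larger script (the "monolith") or executed on its own.

```bash
#!/usr/bin/env bash
# pricing.sh: hypothetical module usable as a library or as a standalone command.

# Module logic: price in cents after a percentage discount (integer math).
discounted_price() {
    local cents=$1 percent=$2
    echo $(( cents * (100 - percent) / 100 ))
}

# When executed directly with its two arguments, act as a standalone command.
# When sourced by another script, only the function above is defined.
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -eq 2 ]]; then
    discounted_price "$@"
fi
```

Sourcing the file with `source pricing.sh` makes `discounted_price` available in-process, while `./pricing.sh 1000 25` runs it as a separate process. The module's logic is written once, and the deployment decision is made at the boundary.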

Summary

If your goal is to build an elastic and composable system like the internet, you need to learn more than the ‘uniform interface’ constraint in REST. In this article we applied all five required REST constraints, and used different architectural elements to build an elastic architecture where an application is composed of multiple RESTful services. This architecture yields several benefits, and most importantly enables continuous delivery of software. Now small, autonomous DevOps teams can build and manage individual services and get direct feedback on usage! Enterprises can incrementally adopt this architecture by migrating modules in a monolithic application.

Netflix OSS, meet Docker!

Oct 16, 2013 3:01:43 AM / by Ritesh Patel posted in Containers, Engineering, AWS, Cloud Architecture

Background

At Nirmata, we are building a cloud services platform to help customers rapidly build cloud ready applications. We believe that the next generation of cloud applications will be composed from stateless, loosely-coupled, fine-grained services. In this architecture, each service can be independently developed, deployed, managed and scaled. The Nirmata Platform itself is built using the same architectural principles. Such an architecture requires a set of core infrastructure services. Since Netflix has open sourced their components [1], we decided to evaluate and extend them. The components that best met our needs were Eureka (a registry for inter-service communication), Ribbon (a client-side load balancer and SDK for service to service communication), Zuul (a gateway service) and Archaius (a configuration framework). In a few days we had the Netflix OSS components working with our services and things looked good!

Challenges in dev/test

Our application was now made up of six independent services, and we could develop and test these services locally on our laptops fairly easily. Next, we decided to move our platform to Amazon AWS for testing and integrate it with our Jenkins continuous integration server. This required automation to deploy the various services in our test environment. We considered various options, such as creating and launching AMIs or using Puppet/Chef. But these approaches would require each service to be installed on a separate EC2 instance, and the number of EC2 instances would quickly grow as we added more services. Being a startup, we started looking for more efficient alternatives for ourselves as well as our customers.

Why Docker

This is when we started looking at Docker [2]. We knew about Linux containers [3] but didn’t feel we could invest time and effort to directly use them. However, Docker made using Linux containers easy! With a few hours of prototyping with Docker we were able to get an application service up and running. Also, once the Docker images were created, running them was a snap, and unlike launching VM instances there was hardly any startup time penalty. This meant that we could now launch our entire application, very quickly on a single medium EC2 instance vs launching multiple micro or small instances. This was amazing!

Using Netflix OSS with Docker

The next step was to get all our services, including the Netflix OSS services, running in Docker (v0.6.3) containers. So, first we created the base image for all our services by installing JDK 7 and Tomcat 7. For the test environment, we wanted to make sure that the base container image could be used for any service, including the Netflix OSS services, so we added a short startup script to the container base image to copy the service war file from a mounted location, set up some environment variables (explanation later) and start the Tomcat service.

    #!/bin/bash
    # Arguments (all optional): $1 service (war) name, $2 HTTP port,
    # $3 Eureka service URL, $4 default host

    echo "Starting $1 on port $2"

    # Copy the war file from the mounted directory to the Tomcat webapps directory
    if [ -n "$1" ]
    then
        cp "/var/lib/webapps/$1.war" "/var/lib/tomcat7/webapps/$1.war"
    fi

    # Add the port to the JVM args
    if [ -n "$2" ]
    then
        echo "export JAVA_OPTS=\"-Xms512m -Xmx1024m -Dport.http.nonssl=$2 -Darchaius.configurationSource.additionalUrls=file:///home/nirmata/dynamic.properties\"" >> /usr/share/tomcat7/bin/setenv.sh
    else
        echo "export JAVA_OPTS=\"-Xms512m -Xmx1024m -Dport.http.nonssl=8080\"" >> /usr/share/tomcat7/bin/setenv.sh
    fi

    # Set up dynamic properties (read by Archaius at runtime)
    echo "eureka.port=$2" >> /home/nirmata/dynamic.properties
    if [ -n "$3" ]
    then
        echo "eureka.serviceUrl.defaultZone=$3" >> /home/nirmata/dynamic.properties
        echo "eureka.serviceUrl.default.defaultZone=$3" >> /home/nirmata/dynamic.properties
    fi
    echo "eureka.environment=" >> /home/nirmata/dynamic.properties
    if [ -n "$4" ]
    then
        echo "default.host=$4" >> /home/nirmata/dynamic.properties
    fi

    # Start Tomcat and keep the container in the foreground by following its log
    service tomcat7 start
    tail -F /var/lib/tomcat7/logs/catalina.out

Following are some key considerations when deploying Netflix OSS with Docker:

Ports – With Docker, ports used by the application in the container need to be specified when launching a container so that the port can be mapped to the host port. Docker automatically assigns the host port. On startup, various Nirmata services register with the service registry, Eureka. A service running within a Docker container needs to register using the host port so that other services can communicate with it. To solve this, we specified the same host and container port when launching a container. For example, Eureka would be launched using port 8080 on the container as well as the host. One challenge this introduced was the need to automatically configure the Tomcat port for various services. This is easily done by specifying the port as an environment variable and modifying the server.xml file to use the environment variable instead of a hard coded value.

IP Address – Each application typically registers with Eureka using its IP address. We noticed that our services running in Docker containers were registering with Eureka using the loopback (127.0.0.1) IP instead of the container IP. This required a change in the Eureka client code to use the container virtual NIC IP address instead of the loopback IP.

Hostname – Another challenge was hostname resolution. Various Nirmata services register with Eureka using the hostname and IP address, but Ribbon uses just the hostname to communicate with the services. This proved problematic as there is no DNS service available to resolve the container hostname to an IP address. For our existing deployment, since Zuul is the only service that communicates with the various other services, we were able to get past this issue by using the same hostname as the Docker host for the various service containers (other than the Zuul container). This is not an elegant solution by any means and may not work for all scenarios. My understanding is that Docker 0.7.0 will address this problem with the new links feature.

Dependencies – In our application, there are a few dependencies; for example, each service needs to know the database URL and the Eureka server URL. The Docker container IP address is assigned at launch, and we couldn’t use the hostname (as described above) to inject this information into our services. We addressed this by launching our services in a predetermined order and by passing in the relevant information at runtime to our container startup script. We used Archaius to load runtime properties dynamically from a file URL.
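The port and hostname considerations above can be sketched with two small shell helpers. This is an illustration under stated assumptions, not our actual tooling. The image name `example/tomcat7-base`, the script path `/home/nirmata/start.sh`, and the host-side war directory are hypothetical placeholders, and the flags are those of the current `docker run` CLI rather than v0.6.3. The first helper only prints the command it would run; the second relies on Tomcat substituting `${...}` system properties in server.xml, with the property name taken from the startup script's `-Dport.http.nonssl` option.

```bash
#!/usr/bin/env bash
IMAGE="example/tomcat7-base"           # hypothetical base image name
START_SCRIPT="/home/nirmata/start.sh"  # hypothetical path of the startup script
WAR_DIR="/var/lib/webapps"             # assumed host directory holding war files

# docker_run_cmd SERVICE PORT EUREKA_URL [HOSTNAME]
# Prints (does not execute) a docker run command for one service container.
docker_run_cmd() {
    local service=$1 port=$2 eureka_url=$3 host=${4:-}
    # Same host and container port, so the port registered in Eureka is reachable
    local cmd="docker run -d -p ${port}:${port}"
    # -h sets the container hostname, e.g. to the Docker host's own name
    if [ -n "$host" ]; then
        cmd+=" -h ${host}"
    fi
    # Mount the war directory at the location the startup script copies from
    cmd+=" -v ${WAR_DIR}:/var/lib/webapps"
    echo "${cmd} ${IMAGE} ${START_SCRIPT} ${service} ${port} ${eureka_url}"
}

# parameterize_port SERVER_XML
# Prints server.xml with the hard-coded HTTP port replaced by a system
# property reference that Tomcat resolves from JAVA_OPTS at startup.
parameterize_port() {
    sed 's/<Connector port="8080"/<Connector port="${port.http.nonssl}"/' "$1"
}
```

Printing the command rather than running it keeps the sketch safe to try on a machine without Docker, and the output can be reviewed before piping it to a shell.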

To bring it all together we automated the deployment of our application by developing a basic orchestrator using the docker-java client library. Now we can easily trigger the deployment of entire application from our Jenkins continuous integration server within minutes and test our services on AWS.
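Our orchestrator was written against the docker-java client library, but the ordering logic can be sketched with the Docker CLI in a few lines of shell. The sketch below is a swapped-in illustration, not our implementation. The image name, start script path, port numbering scheme, and the `/eureka/v2/` URL path are assumptions. It uses the current `--name` flag and `docker inspect` format syntax, and it omits the database container for brevity. Setting `DRY_RUN=1` prints the commands instead of running them.

```bash
#!/usr/bin/env bash
DRY_RUN="${DRY_RUN:-0}"          # 1 = print docker commands, do not execute
IMAGE="example/tomcat7-base"     # hypothetical base image name
START="/home/nirmata/start.sh"   # hypothetical path of the startup script

# run CMD... : execute a command, or just print it in dry-run mode
run() {
    if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

# container_ip NAME : IP address Docker assigned to a running container
container_ip() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "172.17.0.2"   # placeholder address used only for dry runs
    else
        docker inspect -f '{{ .NetworkSettings.IPAddress }}' "$1"
    fi
}

# deploy SERVICE... : start Eureka first, then each service in the given order
deploy() {
    # 1. Eureka goes first because every other service registers with it.
    run docker run -d --name eureka -p 8080:8080 "$IMAGE" "$START" eureka 8080
    local eureka_url
    eureka_url="http://$(container_ip eureka):8080/eureka/v2/"
    # 2. Launch the remaining services, telling each one where Eureka lives.
    local port=8081 service
    for service in "$@"; do
        run docker run -d --name "$service" -p "$port:$port" \
            "$IMAGE" "$START" "$service" "$port" "$eureka_url"
        port=$(( port + 1 ))
    done
}
```

Running `DRY_RUN=1` followed by `deploy orders users` prints three `docker run` commands in launch order, with the Eureka URL passed as the third argument to the startup script in each service container.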

What's next

The combination of Netflix OSS and Docker makes it really easy to develop, deploy and test distributed applications in the cloud. Our current focus is on building a flexible, application-aware orchestration layer that makes it easier to deploy and manage complex applications using Docker, and that addresses some of the current challenges with using Docker. We would love to hear how you are using Docker, and potentially collaborate on Docker & Netflix OSS related projects.

—

Ritesh Patel

Follow us on Twitter @NirmataCloud

References

[1] Netflix OSS, http://netflix.github.io/

[2] Docker, http://www.docker.io/

[3] LXC, http://en.wikipedia.org/wiki/LXC