Accelerating DevOps adoption using containers

Mar 9, 2016 4:09:59 PM / by Ritesh Patel posted in cloud applications, Containers, Business, cloud architecture, microservices, Engineering, DevOps, Other

Dynamic Resource Management for Application Containers

Jan 5, 2016 6:17:50 PM / by Damien Toledo posted in metapod, cloud applications, Containers, vmware, vsphere, cisco, vcloud, Product, microservices, openstack, cloud, auto-scaling, AWS, cloud platform, Docker

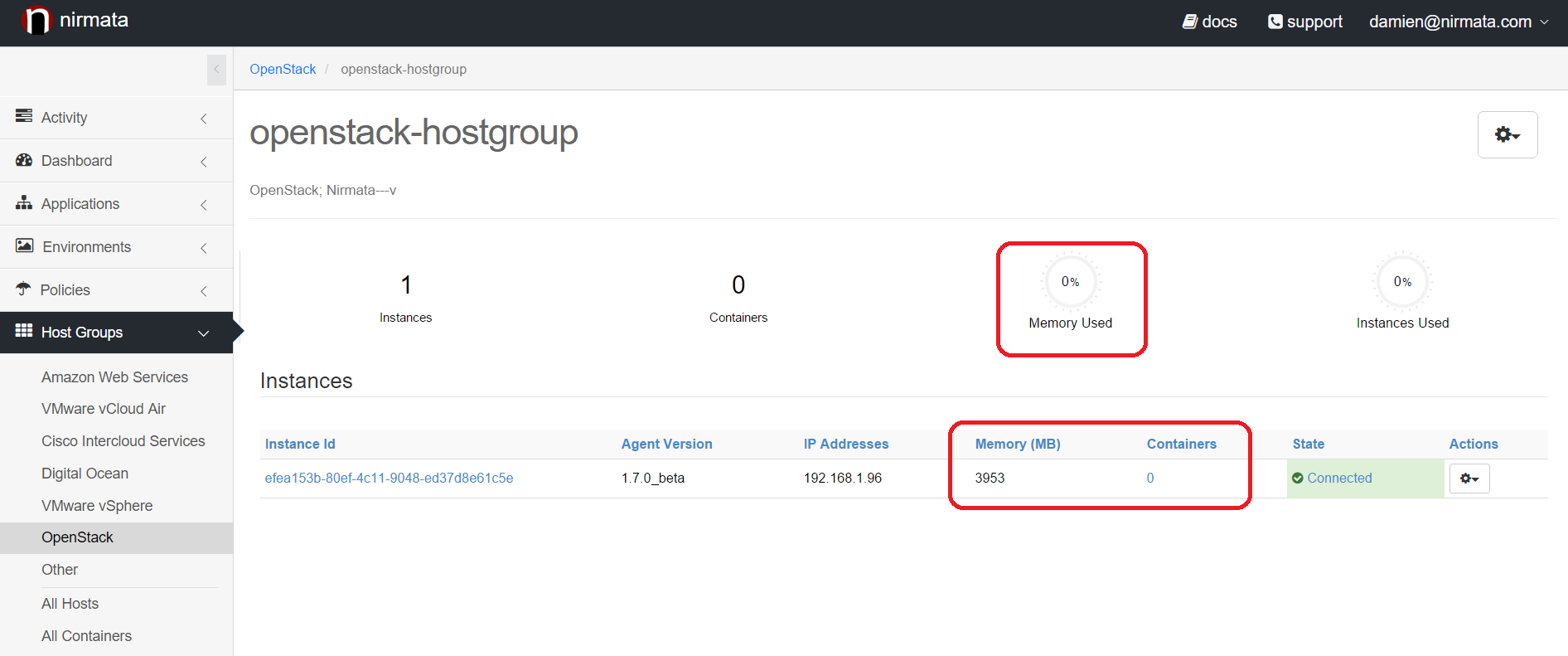

Nirmata provides multi-cloud container services for enterprise DevOps teams. Nirmata is built to leverage Infrastructure as a Service (IaaS) providers, for dynamic allocation and deallocation of resources. In this post, I will discuss a Nirmata feature that helps you optimize your cloud resource usage: Host Auto-Scaling.

Automated deploys with Docker Hub and Nirmata

May 31, 2015 11:40:39 AM / by Ritesh Patel posted in cloud applications, Nirmata, Continuous Delivery, Product, Docker Hub, Continuous Integration, Docker

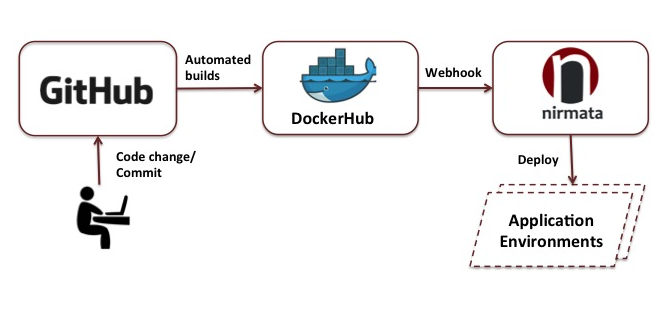

As companies strive to achieve software development agility, they are looking to automate each phase of their software development pipeline. The ultimate goal is to fully automate the deployment of code to production, triggered when a developer checks in a fix or a feature. Companies such as Netflix have built extensive tooling to enable their developers to achieve this level of sophisticated automation [1]. In this post, I will describe how deployments of containerized applications can be completely automated with GitHub, Docker Hub and Nirmata.

Docker Data Containers with Nirmata

May 10, 2015 10:01:16 PM / by Ritesh Patel posted in cloud applications, Containers, Product, cloud, Docker

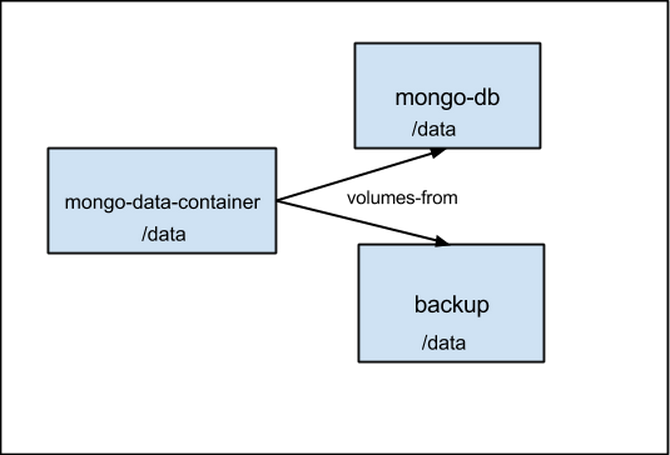

An exciting thing about Docker is the number of interesting use cases that emerge as developers adopt containers in their application development and operations processes.

Auto-Recovery, Activity Feeds, Host Details and More

Apr 13, 2015 2:26:57 PM / by Damien Toledo posted in cloud applications, Containers, Cloud native, Continuous Delivery, resiliency, Product, microservices, DevOps, Orchestration, Cloud Architecture

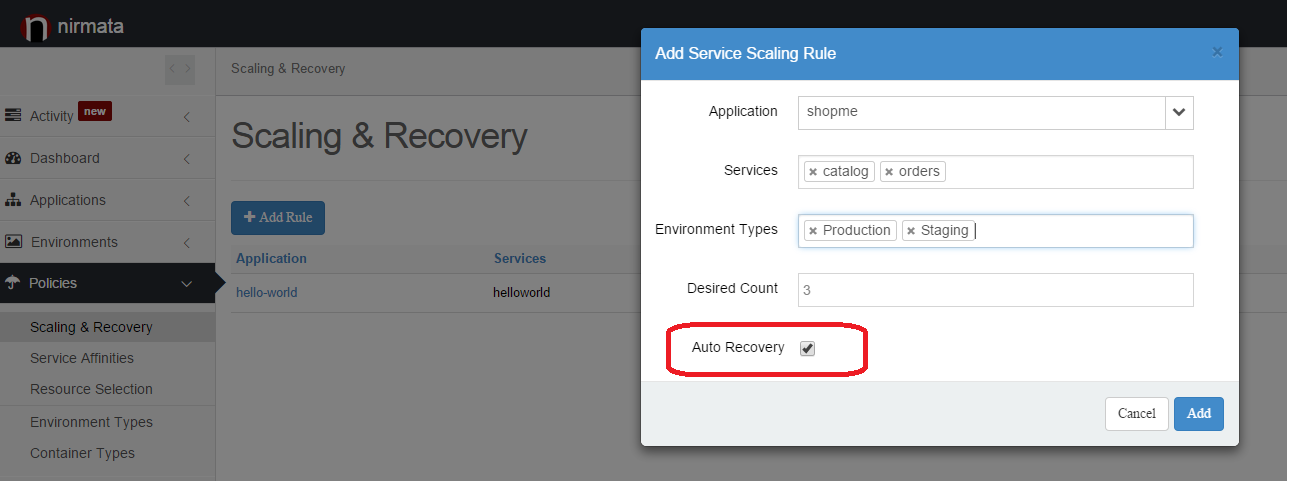

Nirmata is pleased to announce new features and improvements to our solution. Our focus has been on resiliency and state management:

Private Cloud Container Orchestration using Nirmata

Mar 11, 2015 3:55:05 PM / by Damien Toledo posted in cloud applications, vsphere, Product, openstack, Orchestration

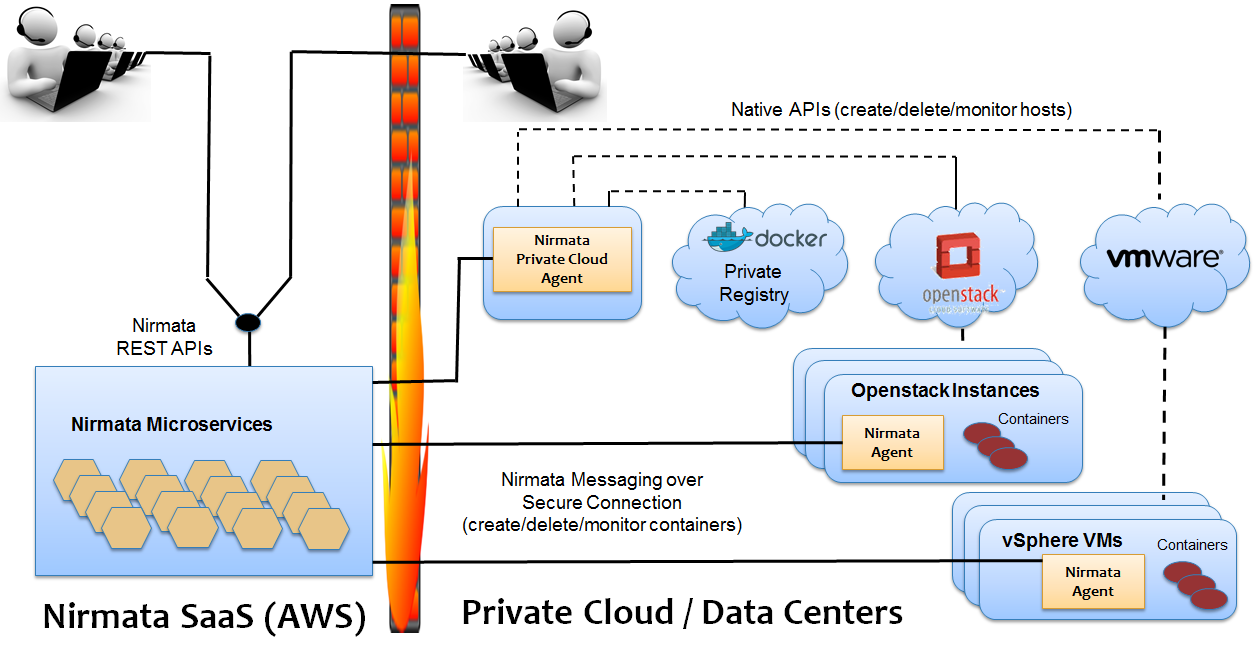

Nirmata has full support for private clouds as part of its Microservices Operations and Management solution. In this post, I will provide some insight into the architecture of this functionality, and walk you through the setup in four easy steps.

Nirmata users can securely manage VMware and OpenStack clouds, as well as Docker Image Registries, in their data center. To connect their private clouds, users need to run the Nirmata Private Cloud Agent on a system within their data center that has network connectivity to their cloud management system (e.g. VMware’s vCenter) and/or a private Docker Image Registry. Once the Nirmata Private Cloud Agent is connected, users can securely provision Host Groups and Image Registries in Nirmata.

Deploy applications, not containers!

Feb 9, 2015 4:52:43 PM / by Ritesh Patel posted in cloud applications, Containers, Product, DevOps

Cloud Applications: Migrate or Transform?

Dec 18, 2014 2:10:40 AM / by Ritesh Patel posted in cloud applications, Continuous Delivery, Engineering

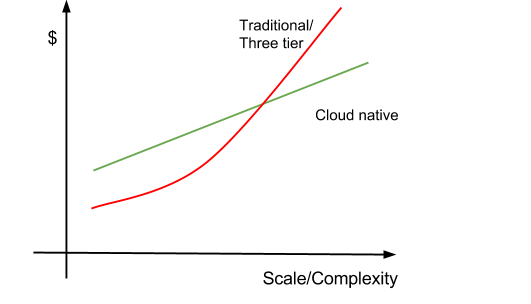

Cloud computing is forcing application developers to think differently about application architecture. Just as client side developers faced a huge paradigm shift from desktop to mobile application development, server side developers are experiencing a similar shift as they develop applications for the cloud. Deploying an application that is not architected for cloud (i.e. cloud native) cannot harness any benefits of the underlying cloud infrastructure and will result in increased operational costs in the long run. For developers building new cloud applications it is absolutely critical to get the architecture right the first time and avoid costly redesigns.

Orchestration holds the key to enterprise adoption of containers

Sep 18, 2014 12:32:45 AM / by Ritesh Patel posted in cloud applications, Containers, Engineering

According to the results of a survey released a couple of weeks ago, Docker is the second most popular open source project, behind OpenStack. Thanks to Docker, containers are fast becoming the de facto delivery vehicle for cloud-based applications. It is amazing that in just over a year, Docker has created a new verb: ‘dockerize’, i.e. to containerize applications. In case you don’t already know, containers are extremely lightweight, and multiple containers can run on a single host or VM. Docker makes it easy to package applications into containers and provision them via CLI or API. Fast start times make containers an excellent choice for dynamic cloud-based applications. Developers have taken the lead in the adoption of Docker, and enterprises large and small are taking notice.

While it is extremely easy for a developer to get started, adoption of Docker by enterprises requires significant tooling for production deployments. Since DockerCon earlier this year, several web-scale companies have open sourced their DevOps tools for Docker, with Google’s Kubernetes getting the most mindshare. Most of these tools are focused on application deployment, with container orchestration as a core capability.

Let’s take a look at what orchestrating containers is all about. Depending on your application(s), many or all of the following capabilities will be required to orchestrate containerized applications.

Flexible Resource Allocation

Orchestration needs to be flexible enough to adapt to application needs, and not the other way around. Application components (services) may have varying needs from the underlying infrastructure, so orchestration needs to take application requirements into account and place each container on an appropriate host. For example, database containers may need to be placed on hosts with high-performance storage, whereas other containers can be placed elsewhere. Additionally, when placing multiple containers on the same host, available resources (memory, CPU, storage, etc.) need to be considered to ensure that containers are not starved of resources. An excellent example of resource-based orchestration is Mesos, which matches tasks to resource offers. In the case of distributed, service-oriented applications, any inter-service dependencies need to be taken into account while deploying applications. Also, containers that use the same host ports need to be placed on different hosts to avoid port collisions.
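The placement constraints described above can be illustrated with a minimal sketch. It assumes a simplified model in which each host advertises free memory, free CPU shares, a set of labels (for example "ssd"), and the host ports already in use. The names `Host`, `ContainerSpec`, `fits`, and `place` are hypothetical and are not taken from Nirmata, Mesos, or Docker.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Host:
    # Hypothetical model of a host's remaining capacity.
    name: str
    free_memory_mb: int
    free_cpu_shares: int
    labels: Set[str] = field(default_factory=set)
    used_ports: Set[int] = field(default_factory=set)


@dataclass
class ContainerSpec:
    # Hypothetical description of what a container needs from a host.
    name: str
    memory_mb: int
    cpu_shares: int
    host_ports: Set[int] = field(default_factory=set)
    required_labels: Set[str] = field(default_factory=set)


def fits(host: Host, spec: ContainerSpec) -> bool:
    """Return True if the host satisfies every constraint of the container."""
    return (
        spec.required_labels <= host.labels              # e.g. needs "ssd"
        and spec.memory_mb <= host.free_memory_mb        # enough memory
        and spec.cpu_shares <= host.free_cpu_shares      # enough CPU
        and not (spec.host_ports & host.used_ports)      # no port collision
    )


def place(hosts: List[Host], spec: ContainerSpec) -> Optional[Host]:
    """Place the container on the fitting host with the most free memory.

    Returns the chosen host, or None if no host can take the container.
    """
    candidates = [h for h in hosts if fits(h, spec)]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda h: h.free_memory_mb)
    # Reserve the resources so later placements see the updated capacity.
    chosen.free_memory_mb -= spec.memory_mb
    chosen.free_cpu_shares -= spec.cpu_shares
    chosen.used_ports |= spec.host_ports
    return chosen
```

Choosing the host with the most free memory is only one possible policy; a real orchestrator would likely weigh several resources and inter-service dependencies together.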

Resiliency

Orchestration should ensure applications are deployed in a resilient manner. Multiple instances of the same service should be deployed on different hosts, possibly in different zones, to ensure high availability. Deploying new containers for a service when an existing container fails ensures that the application stays resilient. If the underlying VM or host fails, the orchestrator should detect the failure and redistribute the containers to other hosts.
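As a rough illustration of these two behaviors, the sketch below spreads service instances round-robin across zones and moves instances off a failed host. The function names `spread` and `recover`, and the dictionary-based placement format, are assumptions made for this example only.

```python
from collections import Counter
from typing import Dict, List


def spread(instances: List[str],
           hosts_by_zone: Dict[str, List[str]]) -> Dict[str, str]:
    """Assign instances round-robin across zones, then across the hosts
    within each zone, so replicas do not pile up in one place."""
    zones = sorted(hosts_by_zone)
    next_host = Counter()  # per-zone index of the next host to use
    placement = {}
    for i, instance in enumerate(instances):
        zone = zones[i % len(zones)]
        zone_hosts = hosts_by_zone[zone]
        placement[instance] = zone_hosts[next_host[zone] % len(zone_hosts)]
        next_host[zone] += 1
    return placement


def recover(placement: Dict[str, str], failed_host: str,
            healthy_hosts: List[str]) -> Dict[str, str]:
    """Return a new placement in which every instance from the failed host
    has been moved to the least-loaded healthy host."""
    healthy = [h for h in healthy_hosts if h != failed_host]
    if not healthy:
        raise ValueError("no healthy hosts available")
    load = {h: 0 for h in healthy}
    for host in placement.values():
        if host in load:
            load[host] += 1
    new_placement = dict(placement)
    for instance, host in placement.items():
        if host == failed_host:
            target = min(healthy, key=lambda h: load[h])
            new_placement[instance] = target
            load[target] += 1
    return new_placement
```

For example, `spread(["web-1", "web-2", "web-3"], {"zone-a": ["a1", "a2"], "zone-b": ["b1"]})` places the three replicas on three different hosts in two zones.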

Scaling

Cloud native applications are dynamic and need to scale up or scale down on demand. As a result, manual as well as automatic scaling of containers is mandatory. The challenge lies in ensuring that underlying resources (host, CPU, memory, etc.) are available when new containers are provisioned; if resources are not available, they need to be automatically provisioned based on preconfigured profiles. Even better would be to provision these resources when the utilization of existing resources reaches a predefined threshold, so that capacity is always available.
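The threshold idea can be made concrete with a small calculation. This sketch assumes identical hosts and a single resource (memory); the function name `hosts_to_add` and the default threshold of 80% are illustrative choices, not values from any particular product.

```python
import math


def hosts_to_add(used_mb: float, host_capacity_mb: float,
                 host_count: int, threshold: float = 0.8) -> int:
    """Return how many identical hosts to add so that overall memory
    utilization is at or below the threshold."""
    if host_capacity_mb <= 0:
        raise ValueError("host_capacity_mb must be positive")
    if not 0 < threshold <= 1:
        raise ValueError("threshold must be in (0, 1]")
    # Smallest host count whose usable capacity (capacity * threshold)
    # covers the current usage.
    needed = math.ceil(used_mb / (host_capacity_mb * threshold))
    return max(0, needed - host_count)
```

A control loop could call this periodically and request the returned number of hosts from the IaaS provider, using a preconfigured profile for the host type.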

Isolation

Depending on the environment in which an application is being deployed, isolation needs may vary. For dev/test environments, it may be perfectly fine to deploy multiple containers or instances of an application on the same set of hosts, whereas staging and production need to be completely isolated environments. Containers running within VMs provide some level of isolation and security, but as multiple containers are launched on a VM, additional ports need to be opened in the security group, and when these containers stop, those ports need to be closed. This may also require networking and firewall policies to be configured dynamically.
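One way to keep security group rules in step with container lifecycles is to reference-count the ports in use. The sketch below is a simplified illustration: `PortTracker` is a hypothetical class, and the `open_port` and `close_port` callbacks stand in for whatever API the cloud provider exposes for editing security group rules.

```python
from collections import Counter
from typing import Callable, Iterable


class PortTracker:
    """Open a port when the first container needs it and close it when
    the last container using it stops."""

    def __init__(self, open_port: Callable[[int], None],
                 close_port: Callable[[int], None]) -> None:
        self._refs = Counter()      # port -> number of running containers
        self._open = open_port      # assumed hook into the security group
        self._close = close_port    # assumed hook into the security group

    def container_started(self, ports: Iterable[int]) -> None:
        for port in ports:
            self._refs[port] += 1
            if self._refs[port] == 1:
                self._open(port)

    def container_stopped(self, ports: Iterable[int]) -> None:
        for port in ports:
            if self._refs[port] == 0:
                continue            # port was never tracked; nothing to do
            self._refs[port] -= 1
            if self._refs[port] == 0:
                del self._refs[port]
                self._close(port)
```

Reference counting avoids closing a port that another running container in the same security group still depends on.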

Visibility

Orchestration of an application is not just a one-time event but an ongoing task. Visibility into various application and infrastructure level statistics and analytics can help make informed decisions when orchestrating containers. Intelligent placement of containers can minimize ‘noisy neighbor’ issues. By understanding application behavior and trends, resource utilization can be further optimized.

Infrastructure Agnostic

Using containers, applications can be completely decoupled from the underlying infrastructure. Applications need not worry about where the underlying resources come from as long as the resources are available. The orchestration tool needs to ensure that necessary resources are always available based on predefined policies or other constructs.

Multi-cloud

Portability across clouds is another key benefit of using containers, and with hybrid cloud applications becoming more common, orchestration of containerized applications across clouds is another requirement. Many enterprises end up using multiple clouds, either for cost savings or due to the regional availability of a cloud provider, making multi-cloud orchestration a must-have.

Integrations

When orchestrating an application, additional tasks may need to be performed, such as cache warm-up or gateway and proxy configuration. These tasks may vary depending on the deployment type (dev/test/staging/production). The ability to integrate orchestration with external tools and services can help automate the entire workflow.

Besides these capabilities, any enterprise-focused solution would need basic capabilities such as Role Based Access Control, Collaboration, Audit Trail, Reporting, etc.

Summary

While there are several open source tools that try to address some of the above mentioned requirements, we have not seen a comprehensive solution targeted at enterprise DevOps teams. We understand that enterprises want flexibility and agility in delivering their applications but do not want to compromise on control and visibility. At Nirmata we truly believe that containers are the future of application delivery and our goal is to empower enterprise DevOps teams to accelerate innovation.

-Ritesh Patel

Follow us on Twitter @NirmataCloud

Are Containers Part of Your IT Strategy?

Aug 15, 2014 5:50:22 AM / by Jim Bugwadia posted in cloud applications, Containers, Business, microservices

In 2002, VMware introduced their Type 1 hypervisor which made server virtualization mainstream and eventually a requirement for all enterprise IT organizations. Although cost savings are often cited as a driver, virtualization became a big deal for businesses as it allows continuous IT services. Using virtualization, IT departments could now offer zero-downtime services, at scale, and on commodity hardware.

In 2014, Docker, Inc. released Docker 1.0. Docker provides efficient image management for Linux containers, and provides a standard interface that can be used to solve several problems with application delivery and management.

Much like VMware made virtualization mainstream, Docker is rapidly making containerization mainstream. In this post I will discuss four reasons why you should consider making containerization part of your business strategy.

Continuous Delivery of Software

Virtualization enabled the automation and standardization of infrastructure services. Containerization enables the automation and standardization of application delivery and management services (a.k.a. platform services).

Faster software delivery leads to faster innovation. If your business delivers software applications as part of its product offerings, the speed at which your teams can deliver new software features and bug fixes provides key competitive differentiation.

Virtualization, service catalogs, and automation tools can provide self-service, and on-demand, Virtual Machines, networks, and storage. But rapid access to Virtual Machines and infrastructure is not sufficient to deliver applications. A lot of additional tooling is required to deliver applications in a consistent and infrastructure agnostic manner.

Application Platform and Configuration Management solutions have tried to address this area, but have not succeeded en masse, as until recently there was no standard way to define application components. Docker addresses this gap, and provides a common and open building block for application automation and orchestration. This fundamentally changes how enterprises can build and deliver platform services.

Another fast growing trend is that cloud applications are being written using a Microservices architectural style, where applications are composed of multiple co-operating fine-grained services (http://bit.ly/1zPPzQH). Containers are the perfect delivery vehicle for microservices. Using this approach, your software teams can now independently version, test, and upgrade individual services. This avoids large integration and test cycles as the focus is on making incremental, but frequent, changes to the system.

Application Portability

Businesses are adopting cloud computing for infrastructure services. Public cloud providers are continuously expanding their offerings and are also constantly reducing their pricing. Some cloud providers may have better regional presence, and others may offer specialized services for certain application types. And, at a certain spend, and for some application types, private cloud remains an attractive option. For all of these reasons, it makes sense to avoid being locked in to a single cloud provider.

Containerization allows application components to be portable to any cloud that offers a base operating system that can run the container. Using containers avoids deep lock-in to a particular cloud provider or platform solution, and enables application runtime portability across public and private clouds.

DevOps Culture

The DevOps movement builds on Agile software development, where small incremental releases are favored over long release cycles, and the Lean Enterprise philosophy, where constant customer feedback loops are used to foster a culture of innovation.

With DevOps, developers are also responsible for the operations of code. As Adrian Cockcroft explains (http://slidesha.re/1v540RL), the traditional definition of “done” was when the code was released to production. Now, “done” is when the code is retired from production.

However, DevOps for a startup delivering a single web application will be very different than DevOps for an enterprise delivering several applications. In larger environments, and for more complex applications, a common platform team is required to service multiple DevOps teams.

Containerization, using Docker, provides a great separation of control across DevOps and platform concerns. A container image becomes the unit of delivery and versioning. DevOps teams can focus on building and delivering containers, while the platform team builds automation around operating the containerized applications across public and private clouds, as well as the shared services used by multiple DevOps teams.

Cost Savings

Virtualization allows several Virtual Machines to run on large physical servers, which can lead to significant consolidation and cost savings. Similarly, containerization allows several application services to run on a single virtual or physical machine, or on a large pool of virtual or physical machines.

Container orchestration solutions can provide policies for packing different types of services onto hosts. This is exactly what Platform-as-a-Service (PaaS) vendors, like Heroku, have been doing under the covers. Container orchestration tools that are built on open technologies like Docker can now make this transparent to end users, and pass along the cost savings to their users.
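To give a sense of what a packing policy might look like, here is a minimal sketch of first-fit decreasing bin packing over a single resource (memory). The post does not name a specific policy, so this algorithm is an assumption chosen for illustration, as is the function name `first_fit_decreasing`.

```python
from typing import Dict, List


def first_fit_decreasing(service_sizes: Dict[str, int],
                         host_capacity: int) -> List[List[str]]:
    """Pack services onto as few identical hosts as this heuristic finds.

    Services are sorted from largest to smallest, and each one goes onto
    the first host that still has room; a new host is added otherwise.
    Returns one list of service names per host.
    """
    hosts = []  # each entry is [remaining_capacity, [service names]]
    ordered = sorted(service_sizes.items(), key=lambda kv: kv[1], reverse=True)
    for name, size in ordered:
        if size > host_capacity:
            raise ValueError(f"{name} does not fit on any host")
        for host in hosts:
            if host[0] >= size:
                host[0] -= size
                host[1].append(name)
                break
        else:
            # No existing host had room, so start a new one.
            hosts.append([host_capacity - size, [name]])
    return [names for _, names in hosts]
```

Fewer hosts for the same set of services translates directly into lower infrastructure spend, which is the saving the paragraph above refers to.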

Current Challenges

Recently, perhaps influenced by the buzz around Docker, Google announced (http://bit.ly/UY030m) that all of their applications, from Search to Gmail, run in Linux Containers. However, Google and others have spent several years building and fine-tuning platforms and tools around containers, and until recently have treated these tools as a competitive advantage.

For mainstream adoption of containerization, better general purpose container orchestration and management tools are required. Application networking and security also remain areas of key development. Finally, the options for non-Linux applications are currently limited.

Summary

Infrastructure virtualization enabled continuous IT services. Containerization enables continuous application delivery.

Containerization also enables application portability, and can be a key architectural building block for cloud native applications. Once an application is containerized, the containers can be run on a pool of virtual or physical machines, or on Infrastructure-as-a-Service based public clouds.

For new applications, packaging the application components as containers should be strongly considered. Just as with virtualization, the list of reasons not to containerize is already rapidly shrinking. Another case where containerization can help is in transforming traditional applications that now need to be delivered as software-as-a-service (http://bit.ly/1pVaeeK).

If your business delivers software, you can leverage containerization to develop and operate software more efficiently and in a highly automated fashion across public and private clouds.