According to the results of a survey released a couple of weeks ago, Docker is the second most popular open source project, behind OpenStack. Thanks to Docker, containers are fast becoming the de facto delivery vehicle for cloud-based applications. It is amazing that in just over a year, Docker has created a new verb: ‘dockerize’, i.e. to containerize an application. In case you don’t already know, containers are extremely lightweight, and multiple containers can run on a single host or VM. Docker makes it easy to package applications into containers and provision them via a CLI or API. Fast start times make containers an excellent choice for dynamic cloud-based applications. Developers have taken the lead in adopting Docker, and enterprises large and small are taking notice.

While it is extremely easy for a developer to get started, adoption of Docker by enterprises requires significant tooling for production deployments. Since DockerCon earlier this year, several web-scale companies have open sourced their DevOps tools for Docker, with Google’s Kubernetes getting the most mindshare. Most of these tools are focused on application deployment, with container orchestration as a core capability.

Let’s take a look at what orchestrating containers is all about. Depending on your application(s), many or all of the following capabilities will be required to orchestrate containerized applications.

Flexible Resource Allocation

Orchestration needs to be flexible enough to adapt to application needs, and not the other way around. Application components (services) may have varying needs from the underlying infrastructure, so orchestration needs to take application requirements into account and place each container on an appropriate host. For example, database containers may need to be placed on hosts with high-performance storage, whereas other containers can be placed elsewhere. Additionally, when placing multiple containers on the same host, available resources (memory, CPU, storage, etc.) need to be considered to ensure that containers are not starved of resources. An excellent example of resource-based orchestration is Mesos, which matches tasks to resource offers. In the case of distributed, service-oriented applications, any inter-service dependencies need to be taken into account while deploying applications. Also, containers that use the same host ports need to be placed on different hosts to avoid port collisions.
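The placement constraints described above (free resources, host capabilities such as fast storage, and host port collisions) can be illustrated with a small sketch. This is not how Mesos, Kubernetes or any other tool implements placement; the `Host` and `Container` classes, the `labels` field and the "most free memory wins" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    free_cpu: float                                # CPU cores still unallocated
    free_mem_mb: int                               # memory still unallocated, in MB
    labels: set = field(default_factory=set)       # hypothetical capability tags, e.g. {"ssd"}
    used_ports: set = field(default_factory=set)   # host ports already bound


@dataclass
class Container:
    name: str
    cpu: float
    mem_mb: int
    host_ports: set = field(default_factory=set)
    required_labels: set = field(default_factory=set)


def fits(host, container):
    """Return True if the host satisfies every constraint of the container."""
    return (
        host.free_cpu >= container.cpu
        and host.free_mem_mb >= container.mem_mb
        and container.required_labels <= host.labels
        and not (container.host_ports & host.used_ports)
    )


def place(container, hosts):
    """Reserve resources on the fitting host with the most free memory.

    Returns the chosen host, or None if no host can take the container.
    """
    candidates = [h for h in hosts if fits(h, container)]
    if not candidates:
        return None
    best = max(candidates, key=lambda h: h.free_mem_mb)
    best.free_cpu -= container.cpu
    best.free_mem_mb -= container.mem_mb
    best.used_ports |= container.host_ports
    return best
```

With one SSD-backed host and one larger host without SSD, a database container that requires the `ssd` label lands on the first host, and two web containers that both bind host port 80 end up on different hosts.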

Resiliency

Orchestration should ensure that applications are deployed in a resilient manner. Multiple instances of the same service should be deployed on different hosts, possibly in different zones, to ensure high availability. Deploying a new container for a service when an existing container fails keeps the application resilient. If the underlying VM or host fails, the orchestrator should detect the failure and redistribute the containers to other hosts.
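As a rough sketch of the two behaviors described above, the following spreads instances of a service across zones and hosts, and moves instances off a host that has failed. The function names, the zone-to-hosts dictionary and the round-robin and least-loaded rules are assumptions chosen for illustration, not the behavior of any particular orchestrator.

```python
from collections import Counter


def spread_replicas(replicas, hosts_by_zone):
    """Assign instances round-robin across zones, then across hosts in each zone.

    hosts_by_zone maps a zone name to a list of host names.
    Returns a list of (zone, host) tuples, one per instance.
    """
    zones = sorted(z for z, hosts in hosts_by_zone.items() if hosts)
    if not zones:
        raise ValueError("no hosts available")
    placement = []
    placed_in_zone = Counter()
    for i in range(replicas):
        zone = zones[i % len(zones)]
        hosts = hosts_by_zone[zone]
        host = hosts[placed_in_zone[zone] % len(hosts)]
        placed_in_zone[zone] += 1
        placement.append((zone, host))
    return placement


def reschedule(placement, failed_host, hosts_by_zone):
    """Move every instance on failed_host to the least-loaded surviving host."""
    survivors = [
        (zone, host)
        for zone, hosts in sorted(hosts_by_zone.items())
        for host in hosts
        if host != failed_host
    ]
    if not survivors:
        raise RuntimeError("no surviving hosts")
    load = Counter(host for _, host in placement if host != failed_host)
    result = []
    for zone, host in placement:
        if host != failed_host:
            result.append((zone, host))
            continue
        # Ties are broken by (zone, host) name so the outcome is deterministic.
        target = min(survivors, key=lambda zh: (load[zh[1]], zh))
        load[target[1]] += 1
        result.append(target)
    return result
```

A real orchestrator would also need to detect the failure in the first place (for example through health checks) and re-check resource and port constraints on the target host; both are left out here.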

Scaling

Cloud-native applications are dynamic and need to scale up or down on demand. As a result, both manual and automatic scaling of containers are mandatory. The challenge lies in ensuring that the underlying resources (hosts, CPU, memory, etc.) are available when new containers are provisioned; if they are not, they need to be provisioned automatically based on preconfigured profiles. Better still is to provision these resources when the utilization of existing resources reaches a predefined threshold, so that capacity is available before it is needed.
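The threshold idea can be made concrete with a little arithmetic. The sketch below, under simplifying assumptions, considers CPU only and a single host profile of fixed size; the function name, its parameters and the default threshold of 0.8 are hypothetical. It returns how many hosts to add so that utilization stays at or below the threshold once the new containers are placed.

```python
import math


def hosts_to_provision(total_cpu, free_cpu, demand_cpu, profile_cpu, threshold=0.8):
    """Return the number of hosts to add before placing new containers.

    total_cpu   -- CPU cores across all existing hosts
    free_cpu    -- CPU cores currently unallocated
    demand_cpu  -- CPU cores required by the containers about to be started
    profile_cpu -- CPU cores provided by one host of the preconfigured profile
    threshold   -- maximum acceptable fraction of capacity in use
    """
    if not 0 < threshold <= 1:
        raise ValueError("threshold must be in (0, 1]")
    if profile_cpu <= 0:
        raise ValueError("profile_cpu must be positive")
    used_after = (total_cpu - free_cpu) + demand_cpu
    if used_after <= threshold * total_cpu:
        return 0  # existing capacity is enough
    # Smallest n such that used_after <= threshold * (total_cpu + n * profile_cpu)
    extra_capacity = used_after / threshold - total_cpu
    return math.ceil(extra_capacity / profile_cpu)
```

For example, with 16 cores in total, 4 of them free, and new containers needing 2 cores, 14 cores would be in use, which exceeds 80% of 16. Adding one 4-core host raises capacity to 20 cores and brings utilization back to 70%.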

Isolation

Depending on the environment in which an application is being deployed, isolation needs may vary. For dev/test environments, it may be perfectly fine to deploy multiple containers or instances of an application on the same set of hosts, whereas staging and production need to be completely isolated environments. Containers running within VMs provide some level of isolation and security, but as multiple containers are launched on a VM, additional ports need to be opened in the security group, and when these containers stop, those ports need to be closed. This may also require networking and firewall policies to be configured dynamically.
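The opening and closing of ports described above is essentially a reconciliation between the ports that running containers need and the ports that are currently open. A minimal sketch follows, assuming the caller can list both sets and will apply the result through whatever security group or firewall API its cloud provides; the function name and data shapes are hypothetical.

```python
def reconcile_ports(running_containers, open_ports):
    """Compute which host ports to open and which to close.

    running_containers -- dict mapping a container name to the host ports it uses
    open_ports         -- set of ports currently open in the security group
    Returns (to_open, to_close) as sorted lists.
    """
    desired = set()
    for ports in running_containers.values():
        desired |= set(ports)
    to_open = sorted(desired - open_ports)
    to_close = sorted(open_ports - desired)
    return to_open, to_close
```

Running this each time a container starts or stops keeps the security group limited to the ports that are actually in use.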

Visibility

Orchestration of an application is not a one-time event but an ongoing task. Visibility into application-level and infrastructure-level statistics and analytics helps in making informed decisions when orchestrating containers. Intelligent placement of containers can minimize ‘noisy neighbor’ issues. By understanding application behavior and trends, resource utilization can be further optimized.

Infrastructure Agnostic

Using containers, applications can be completely decoupled from the underlying infrastructure. Applications need not worry about where the underlying resources come from, as long as the resources are available. The orchestration tool needs to ensure that the necessary resources are always available, based on predefined policies or other constructs.

Multi-cloud

Portability across clouds is another key benefit of using containers, and with hybrid cloud applications becoming more common, orchestration of containerized applications across clouds is another requirement. Many enterprises end up using multiple clouds, either for cost savings or because of the regional availability of cloud providers, making multi-cloud orchestration a must-have.

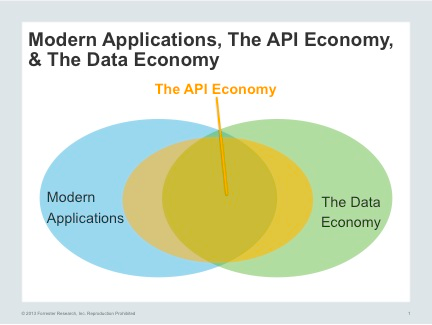

Integrations

When orchestrating an application, additional tasks may need to be performed, for example cache warm-up or gateway and proxy configuration. These tasks may vary depending on the deployment type (dev/test/staging/production). The ability to integrate orchestration with external tools and services can help automate the entire workflow.

Besides these capabilities, any enterprise-focused solution would need basic capabilities such as role-based access control, collaboration, audit trails and reporting.

Summary

While there are several open source tools that try to address some of the above-mentioned requirements, we have not seen a comprehensive solution targeted at enterprise DevOps teams. We understand that enterprises want flexibility and agility in delivering their applications but do not want to compromise on control and visibility. At Nirmata, we truly believe that containers are the future of application delivery, and our goal is to empower enterprise DevOps teams to accelerate innovation.

-Ritesh Patel
