Jim Bugwadia

Recent Posts

Organizing Enterprise DevOps

Oct 18, 2014 9:09:45 PM / by Jim Bugwadia posted in Continuous Delivery, Engineering, DevOps

Are Containers Part of Your IT Strategy?

Aug 15, 2014 5:50:22 AM / by Jim Bugwadia posted in cloud applications, Containers, Business, microservices

In 2002, VMware introduced its Type 1 hypervisor, which made server virtualization mainstream and eventually a requirement for all enterprise IT organizations. Although cost savings are often cited as the driver, virtualization became a big deal for businesses because it enables continuous IT services. With virtualization, IT departments could offer zero-downtime services, at scale, and on commodity hardware.

In 2014, Docker, Inc. released Docker 1.0. Docker provides efficient image management for Linux containers and a standard interface that can be used to solve several problems with application delivery and management.

Much like VMware made virtualization mainstream, Docker is rapidly making containerization mainstream. In this post I will discuss four reasons why you should consider making containerization part of your business strategy.

Continuous Delivery of Software

Virtualization enabled the automation and standardization of infrastructure services. Containerization enables the automation and standardization of application delivery and management services (a.k.a. platform services).

Faster software delivery leads to faster innovation. If your business delivers software applications as part of its product offerings, the speed at which your teams can deliver new software features and bug fixes provides key competitive differentiation.

Virtualization, service catalogs, and automation tools can provide self-service, on-demand virtual machines, networks, and storage. But rapid access to virtual machines and infrastructure is not sufficient to deliver applications. A lot of additional tooling is required to deliver applications in a consistent and infrastructure-agnostic manner.

Application Platform and Configuration Management solutions have tried to address this area, but have not succeeded en masse because, until recently, there was no standard way to define application components. Docker addresses this gap and provides a common and open building block for application automation and orchestration. This fundamentally changes how enterprises can build and deliver platform services.

Another fast-growing trend is that cloud applications are being written using a microservices architectural style, where applications are composed of multiple cooperating, fine-grained services (http://bit.ly/1zPPzQH). Containers are a natural delivery vehicle for microservices. Using this approach, your software teams can independently version, test, and upgrade individual services. This avoids large integration and test cycles, as the focus is on making incremental, but frequent, changes to the system.
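To illustrate what independently versioning and upgrading services can look like, here is a minimal Python sketch. It assumes a release is simply a mapping from service name to container image tag; the service names, the `example/...` image tags, and the `upgrade` and `changed_services` helpers are all hypothetical and do not correspond to any particular tool.

```python
def upgrade(release: dict, service: str, new_tag: str) -> dict:
    """Return a new release with exactly one service moved to new_tag."""
    if service not in release:
        raise KeyError(f"unknown service: {service}")
    updated = dict(release)      # copy, so the previous release stays intact
    updated[service] = new_tag
    return updated


def changed_services(old: dict, new: dict) -> list:
    """List the services whose image tag differs between two releases."""
    return sorted(name for name in new if old.get(name) != new[name])


# Hypothetical application made up of three independently versioned services.
release_1 = {
    "catalog": "example/catalog:1.4.0",
    "cart": "example/cart:2.1.3",
    "checkout": "example/checkout:0.9.7",
}

# A bug fix in the cart service ships on its own; nothing else is touched.
release_2 = upgrade(release_1, "cart", "example/cart:2.1.4")
print(changed_services(release_1, release_2))
```

Because each service carries its own image tag, only the changed service needs to be rebuilt, tested, and rolled out, which is what keeps the change small.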

Application Portability

Businesses are adopting cloud computing for infrastructure services. Public cloud providers are continuously expanding their offerings and are also constantly reducing their pricing. Some cloud providers may have better regional presence, and others may offer specialized services for certain application types. And, at a certain spend, and for some application types, private cloud remains an attractive option. For all of these reasons, it makes sense to avoid being locked in to a single cloud provider.

Containerization allows application components to be portable to any cloud that offers a base operating system that can run the container. Using containers avoids deep lock-in to a particular cloud provider or platform solution, and enables application runtime portability across public and private clouds.

DevOps Culture

The DevOps movement builds on Agile software development, where small incremental releases are favored over long release cycles, and the Lean Enterprise philosophy, where constant customer feedback loops are used to foster a culture of innovation.

With DevOps, developers are also responsible for the operations of code. As Adrian Cockcroft explains (http://slidesha.re/1v540RL), the traditional definition of “done” was when the code was released to production. Now, “done” is when the code is retired from production.

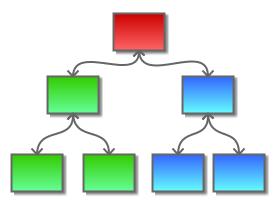

However, DevOps for a startup delivering a single web application will be very different from DevOps for an enterprise delivering several applications. In larger environments, and for more complex applications, a common platform team is required to service multiple DevOps teams.

Containerization, using Docker, provides a clean separation between DevOps and platform concerns. A container image becomes the unit of delivery and versioning. DevOps teams can focus on building and delivering containers, while the platform team builds automation for operating the containerized applications across public and private clouds, as well as the shared services used by multiple DevOps teams.

Cost Savings

Virtualization allows several Virtual Machines to run on large physical servers, which can lead to significant consolidation and cost savings. Similarly, containerization allows several application services to run on a single virtual or physical machine, or on a large pool of virtual or physical machines.

Container orchestration solutions can provide policies for packing different types of services onto shared machines. This is exactly what Platform-as-a-Service (PaaS) vendors, like Heroku, have been doing under the covers. Container orchestration tools that are built on open technologies like Docker can now make this transparent to end users, and pass the cost savings along to them.
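To make the packing idea concrete, here is a minimal Python sketch of one simple placement policy, first-fit decreasing: the largest services are placed first, each on the first machine that still has enough free memory. The `Host` class, the service names, and the memory figures are all hypothetical. This is not how Heroku or any particular orchestration tool works; real schedulers weigh many more constraints (CPU, affinity, availability zones, and so on).

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    memory_mb: int                                  # total memory on this machine
    services: list = field(default_factory=list)    # placed (name, memory_mb) pairs

    def free_mb(self) -> int:
        return self.memory_mb - sum(mem for _, mem in self.services)


def pack_first_fit_decreasing(services, hosts):
    """Place (name, memory_mb) services on hosts, largest first.

    Returns the names of the services that did not fit on any host.
    """
    unplaced = []
    for name, mem in sorted(services, key=lambda s: s[1], reverse=True):
        # First host with enough free memory wins.
        target = next((h for h in hosts if h.free_mb() >= mem), None)
        if target is None:
            unplaced.append(name)
        else:
            target.services.append((name, mem))
    return unplaced


hosts = [Host("host-a", 4096), Host("host-b", 4096)]
services = [("web", 1024), ("api", 2048), ("cache", 3072),
            ("worker", 1024), ("batch", 2048)]

unplaced = pack_first_fit_decreasing(services, hosts)
for host in hosts:
    print(host.name, [name for name, _ in host.services], "free:", host.free_mb())
print("unplaced:", unplaced)
```

In this example the five services request more memory in total than the two machines have, so one service is left unplaced; a real policy would then add a machine or reject the deployment.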

Current Challenges

Recently, perhaps influenced by the buzz around Docker, Google announced (http://bit.ly/UY030m) that all of their applications, from Search to Gmail, run in Linux containers. However, Google and others have spent several years building and fine-tuning platforms and tools around containers, and until recently have treated these tools as a competitive advantage.

For mainstream adoption of containerization, better general-purpose container orchestration and management tools are required. Application networking and security also remain key areas of development. Finally, the options for non-Linux applications are currently limited.

Summary

Infrastructure virtualization enabled continuous IT services. Containerization enables continuous application delivery.

Containerization also enables application portability, and can be a key architectural building block for cloud-native applications. Once an application is containerized, the containers can be run on a pool of virtual or physical machines, or on Infrastructure-as-a-Service public clouds.

For new applications, packaging the application components as containers should be strongly considered. Just as with virtualization, the list of reasons not to containerize is already rapidly shrinking. Containerization can also help transform traditional applications that now need to be delivered as software-as-a-service (http://bit.ly/1pVaeeK).

If your business delivers software, you can leverage containerization to develop and operate software more efficiently and in a highly automated fashion across public and private clouds.

Are Containers Part of Your IT Strategy?

[fa icon="calendar'] Aug 15, 2014 5:50:22 AM / by Jim Bugwadia posted in cloud applications, Containers, Business, microservices

In 2002, VMware introduced their Type 1 hypervisor which made server virtualization mainstream and eventually a requirement for all enterprise IT organizations. Although cost savings are often cited as a driver, virtualization became a big deal for businesses as it allows continuous IT services. Using virtualization, IT departments could now offer zero-downtime services, at scale, and on commodity hardware.

In 2014, Docker, Inc. released Docker 1.0. Docker provides efficient image management for linux containers, and provides a standard interface that can be used to solve several problems with application delivery and management.

Much like VMware made virtualization mainstream, Docker is rapidly making containerization mainstream. In this post I will discuss four reasons why you should consider making containerization part of your business strategy.

Continuous Delivery of Software

Virtualization enabled the automation and standardization of infrastructure services. Containerization enables the automation and standardization of application delivery and management services (a.k.a. platform services).

Faster software delivery leads to faster innovation. If your business delivers software applications as part of its product offerings, the speed at which your teams can deliver new software features and bug fixes provides key competitive differentiation.

Virtualization, service catalogs, and automation tools can provide self-service, and on-demand, Virtual Machines, networks, and storage. But rapid access to Virtual Machines and infrastructure is not sufficient to deliver applications. A lot of additional tooling is required to deliver applications in a consistent and infrastructure agnostic manner.

Application Platform and Configuration Management solutions have tried to address this area, but have not succeeded en mass, as until recently there was no standard way to define application components. Docker addresses this gap, and provides a common and open building block for application automation and orchestration. This fundamentally changes how enterprises can build and deliver platform services.

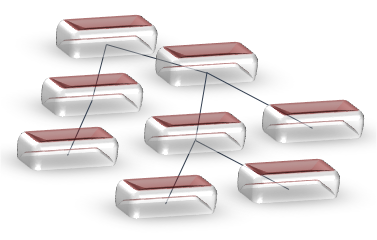

Another fast growing trend is that cloud applications are being written using a Microservices architectural style, where applications are composed of multiple co-operating fine-grained services (http://bit.ly/1zPPzQH). Containers are the perfect delivery vehicle for microservices. Using this approach, your software teams can now independently version, test, and upgrade individual services. This avoids large integration and test cycles as the focus is on making incremental, but frequent, changes to the system.

Application Portability

Businesses are adopting cloud computing for infrastructure services. Public cloud providers are continuously expanding their offerings and are also constantly reducing their pricing. Some cloud providers may have better regional presence, and others may offer specialized services for certain application types. And, at a certain spend, and for some application types, private cloud remains an attractive option. For all of these reasons, it makes sense to avoid being locked in to a single cloud provider.

Containerization, allows application components to be portable to any cloud that offers base operating system that can run the container. Using containers avoids deep lock-in to a particular cloud provider, or a platform solution, and enables application runtime portability across public and private cloud.

DevOps Culture

The DevOps movement builds on Agile software development, where small incremental releases are favored to long release cycles, and the Lean Enterprise philosophy, where constant customer feedback loops are used to foster a culture of innovation.

With DevOps, developers also responsible for the operations of code. As Adrian Cockroft explains (http://slidesha.re/1v540RL), the traditional definition of “done” was when the code was released to production. Now, “done” is when the code is retired from production.

However, DevOps for a startup delivering a single web application will be very different than DevOps for an enterprise delivering several applications. In larger environments, and for more complex applications, a common platform team is required to service multiple DevOps teams.

Containerization, using Docker, provides a great separation of control across DevOps and platform concerns. A container image becomes the unit of delivery and versioning. DevOps teams can focus on building and delivering containers, and the platform team build automation around operating the containerized applications across public and private clouds, as well as shared services used by multiple DevOps teams.

Cost Savings

Virtualization allows several Virtual Machines to run on large physical servers, which can lead to significant consolidation and cost savings. Similarly, containerization allows several application services to run on a single virtual or physical machine, or on a large pool of virtual or physical machines.

Container orchestration solutions can provide policies to packing different types of services. This is exactly what Platform-as-a-Service (PaaS) vendors, like Heroku, have been doing under the covers. Containerization orchestration tools, that are built on open technologies like Docker, can now make this transparent to end users, and pass along the cost savings to their users.

Current Challenges

Recently, perhaps influenced by the buzz around Docker, Google announced ( http://bit.ly/UY030m) that all of their applications, from Search to Gmail, run in Linux Containers. However, Google and others have spent several years building and fine-tuning platforms and tools around containers, and until recently have treated these tools as a competitive advantage.

For mainstream adoption of containerization, better general purpose container orchestration and management tools are required. Application networking and security also remain areas of key development. Finally, the options for non-Linux applications are currently limited.

Summary

Infrastructure virtualization enabled continuous IT services. Containerization enables continuous application delivery.

Containerization also enables application portability, and can be a key architectural building block for cloud native applications. Once an application is containerized, the containers can be run on a pool of virtual or physical machines, or on Infrastructure-as-a-Service based public clouds.

For new applications, packaging the application components as containers should be strongly considered. Just as with virtualization, the list of reasons why not to containerize are already rapidly shrinking. Another case where containerization can help, is to transform traditional applications that now need to be delivered as software-as-a-service (http://bit.ly/1pVaeeK).

If your business delivers software, you can leverage containerization to develop and operate software more efficiently and in a highly automated fashion across public and private clouds.

Cloud native software: Microservices

Jul 25, 2014 5:10:47 AM / by Jim Bugwadia posted in microservices, Engineering

Cloud native software: key characteristics

May 20, 2014 5:00:55 AM / by Jim Bugwadia posted in cloud applications, microservices, Engineering, Cloud Architecture

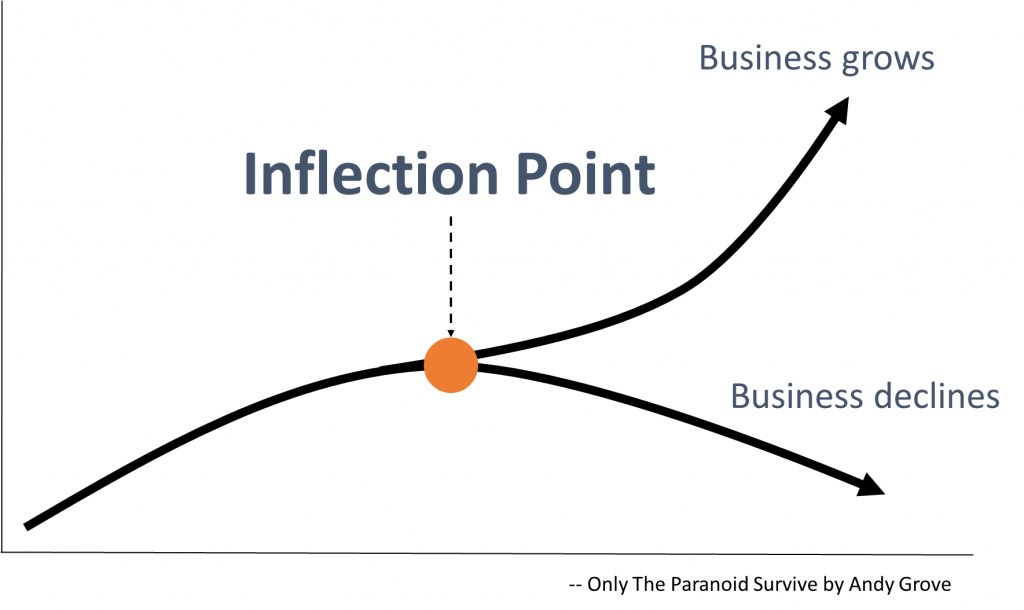

The Inflection Point in Enterprise Software

Mar 13, 2014 2:07:37 AM / by Jim Bugwadia posted in Continuous Delivery, Business, Cloud Architecture

Enterprise software is at a major inflection point, and how businesses act now will determine their future.

For the last few decades, enterprise software development has evolved around the client-server compute paradigm, and product delivery models where customers are responsible for software maintenance and operations.

Cloud is now the new compute paradigm, and cloud computing impacts how enterprise software is built, sold, and managed. With the cloud-based consumption model, vendors are now responsible for ongoing software maintenance and operations. Cloud computing has also led to solutions that increasingly blur the distinction between “non-tech” products and smart technology-enabled solutions, which is making software delivery a core competency for every business.

Businesses that understand these changes, and are able to embrace cloud-native software development, will succeed. Let's discuss each of these three topics in more detail:

Cloud is the new computing paradigm

Over the last few years, enterprise IT has rapidly transitioned away from building and operating static, expensive data centers. The initial shift was to virtualize network, compute, and storage and move towards software-defined data centers. But once that change occurred within organizations, it became a no-brainer to take the next step and fully adopt cloud computing. With cloud computing, enterprise IT is now delivered “as a service”. Business units interact with IT using web-based self-service tools and expect rapid delivery of services.

“...cloud computing is set to become mainstream computing, period” -- Joe McKendrick’s single prediction for 2014

Cloud computing is ideal for product and software development. The key driver here is not cost savings, but business agility. Most software and IT projects fail due to inadequate requirements based on what might have worked before, a poor understanding of customer needs, and lack of data on actual adoption and usage. Cloud computing lets small teams run business experiments faster, and without large capital investments. This enables the Lean Enterprise “build-measure-learn” mindset for product development.

However, software built for the cloud is very different from software designed for traditional static data centers. Monolithic tiered systems and integrated applications do not fare well in the cloud. The pioneers who have fully embraced cloud computing have also evolved to a new software architecture. This is the first driver for the inflection point in enterprise software.

“Most of today's applications, and all of tomorrow's, are built with the cloud in mind. That means yesterday's infrastructure -- and accompanying assumptions about resource allocation, cost and development -- simply won't do.” -- Bernard Golden, The New Cloud Application Design Paradigm

Consumption economics is here to stay

In their engaging book “Consumption Economics”, the authors provide compelling insights into how cloud computing and managed services are changing enterprise IT business models.

In the past, the risk of implementation for any large and complex IT project has been mostly on the customer. Even when the customer engages with a vendor’s professional services team, or a system integrator, the customer pays the bill regardless of the project’s outcome.

Enterprise IT customers have also been trained to spend large amounts on the initial purchase of products, and typically pay 10-20% for annual maintenance. IT vendors have been able to mostly pass the burden of system integration, operations and maintenance, including managing upgrades and scalability, to their customers.

The advances in network availability and speed, and the rise of the internet, led to hosted service models. But it was the financial crisis in the last decade that forced vendors to aggressively compete for shrinking IT budgets. During this time, customers could not afford the risks of large IT projects with low success rates, and turned to an “as a service” delivery model. This transition not only replaced expensive CAPEX budgets with lower-cost OPEX budgets, but also moved all the risk of implementation and delivery to the enterprise IT vendors.

What this means for businesses selling into the enterprise is that they now need to invest in building systems that make it easier, and more cost-effective, to operate and manage software at scale and for multiple tenants. The businesses that get good at this will have a significant advantage over those that try to shoehorn existing systems into the cloud.

This is the second driver for the inflection point in enterprise software.

Every business is now a software business

Many businesses provide software as a part, or the entirety, of their product offering. It is clear that these businesses need to deliver software, better and faster, to win.

However, another major transition is that software is redefining every business, even those that were previously thought of as “non-tech” companies. This is why, in late 2013, Monsanto, an agriculture company, bought a weather prediction software company founded by two former Google employees in 2006. This is why GE has established a new Global Software division, located in San Ramon, California, and has invested millions in Pivotal, a platform-as-a-service company. This is also why every major retailer now has a Silicon Valley office.

Businesses that can build software faster will win. This is the third driver for the inflection point in enterprise software.

“Nike’s FuelBand is both a device and a collaboration solution (that’s why Under Armour bought MapMyFitness). Siemens Medical’s MRI machines are both a camera (of sorts) and a content management system. Heck, even a Citibank credit card is both a payment tool and an online financial application. Any company that is embracing the age of the customer is quickly learning that you can’t do that without software.” -- James Staten, Forrester

What you can do

Today, for most businesses a cloud strategy is all about delivering core IT services like compute, network, and storage faster to their business teams. This is an important first step, but not enough.

As a business, your end goal is to deliver products and services faster. This translates to being able to run business experiments efficiently, and being able to develop and operate software faster, at scale, and for multiple tenants.

To do this, your business needs to adopt a strategy to embrace cloud-native software. This means a move away from developing integrated, monolithic, 3-tiered software systems, which have served us well in the client-server era, and towards composable cloud-native applications. As with any paradigm shift, you can start with a pilot project, learn, and grow from there.

In this post, I mentioned cloud-native software a few times but did not discuss what exactly that is. While that is rapidly evolving, there are common patterns and best practices in place. In my next post, I will discuss some of these and how you can transform current software to be cloud-native.

References

- Joe McKendrick, My One Big Fat Cloud Computing Prediction for 2014, http://www.forbes.com/sites/joemckendrick/2013/12/19/my-one-big-fat-cloud-computing-prediction-for-2014/

- Bernard Golden, The New Cloud Application Design Paradigm, http://www.cio.com/article/746597/The_New_Cloud_Application_Design_Paradigm

- Consumption Economics: The New Rules of Tech, http://www.amazon.com/Consumption-Economics-The-Rules-Tech/dp/0984213031

- James Staten, Forrester, http://blogs.forrester.com/james_staten/14-03-17-how_is_an_earthquake_triggered_in_silicon_valley_turning_your_company_into_a_software_vendor
